How to Fix AutoGPT and Build a Proto-AGI

AI agents powered by language models are promising, but nearly useless at the moment. I cover what is not working, why, and what can be done to get us to useful AI agents that anyone can use.

The promise of AI agents and AutoGPT

Large language models (LLMs) such as GPT-4 and ChatGPT have shown impressive capabilities in dialogue-based interactions and summarization, but sometimes talking is not enough. What we expect from a useful AI companion is the automation of mundane tasks by actively taking actions. AI agents do exactly that: they use LLMs as reasoning engines, they keep a memory of past actions and, crucially, they can use tools to act on the external world. Example tools are Google search, scientific calculators, execution of arbitrary code and even control of physical robots. AI agents are often task-based: they expect instructions about a task to perform, they execute it and then they shut down.

AutoGPT is a popular task-based AI agent with the ambitious goal of tackling long-running tasks, removing the need for the user to glue the intermediate results together. In an ideal scenario, an arbitrary task goes in and the task result comes out.

This is an example initial prompt to set the user goals:

DEFAULT_SYSTEM_PROMPT_AICONFIG_AUTOMATIC = """

Your task is to devise up to 5 highly effective goals and an appropriate role-based name (_GPT) for an autonomous agent, ensuring that the goals are optimally aligned with the successful completion of its assigned task.

The user will provide the task, you will provide only the output in the exact format specified below with no explanation or conversation.

Example input:

Help me with marketing my business

Example output:

Name: CMOGPT

Description: a professional digital marketer AI that assists Solopreneurs in growing their businesses by providing world-class expertise in solving marketing problems for SaaS, content products, agencies, and more.

Goals:

- Engage in effective problem-solving, prioritization, planning, and supporting execution to address your marketing needs as your virtual Chief Marketing Officer.

- Provide specific, actionable, and concise advice to help you make informed decisions without the use of platitudes or overly wordy explanations.

- Identify and prioritize quick wins and cost-effective campaigns that maximize results with minimal time and budget investment.

- Proactively take the lead in guiding you and offering suggestions when faced with unclear information or uncertainty to ensure your marketing strategy remains on track.

"""

DEFAULT_TASK_PROMPT_AICONFIG_AUTOMATIC = (

"Task: ''\n"

"Respond only with the output in the exact format specified in the system prompt, with no explanation or conversation.\n"

)

DEFAULT_USER_DESIRE_PROMPT = "Write a wikipedia style article about the project: https://github.com/significant-gravitas/Auto-GPT" # Default prompt

What AutoGPT then does can be summarised as: perform actions until you have fulfilled the initial goal. Every action is really just a call to an LLM, where the prompt is carefully composed to recall previous actions and to improve the quality of the response through self-criticism.
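The loop described above can be sketched in a few lines. This is a minimal illustration, not AutoGPT's actual code: `run_agent`, the `llm` callable, the `tools` dictionary and the scripted replies are all hypothetical stand-ins, and a real agent would also handle malformed replies and long-term memory.

```python
import json


def run_agent(llm, tools, goal, max_steps=10):
    """Ask the LLM for one command at a time until it says the task is complete.

    `llm` maps a prompt string to a JSON reply; `tools` maps command names to
    Python callables. Both are hypothetical stand-ins, not AutoGPT's real API.
    """
    history = []  # memory of past actions, replayed in every prompt
    for _ in range(max_steps):  # hard step budget so the loop always ends
        prompt = f"GOAL: {goal}\nPAST ACTIONS: {json.dumps(history)}"
        command = json.loads(llm(prompt))["command"]
        if command["name"] == "task_complete":
            break
        result = tools[command["name"]](**command["args"])
        history.append({"command": command["name"], "result": result})
    return history


# Scripted fake LLM: replies with a fixed sequence of commands.
replies = iter([
    '{"command": {"name": "google", "args": {"input": "Auto-GPT"}}}',
    '{"command": {"name": "task_complete", "args": {"reason": "done"}}}',
])
history = run_agent(
    llm=lambda prompt: next(replies),
    tools={"google": lambda input: f"results for {input}"},
    goal="Write an article about Auto-GPT",
)
print(history)
```

The `max_steps` budget is the only thing here that prevents an endless run, which is worth keeping in mind for the failure modes discussed later.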

Here is a typical system message used internally by AutoGPT:

You are {{ai-name}}, {{user-provided AI bot description}}.

Your decisions must always be made independently without seeking user assistance. Play to your strengths as an LLM and pursue simple strategies with no legal complications.

GOALS:

1. {{user-provided goal 1}}

2. {{user-provided goal 2}}

3. ...

4. ...

5. ...

Constraints:

1. ~4000 word limit for short term memory. Your short term memory is short, so immediately save important information to files.

2. If you are unsure how you previously did something or want to recall past events, thinking about similar events will help you remember.

3. No user assistance

4. Exclusively use the commands listed in double quotes e.g. "command name"

5. Use subprocesses for commands that will not terminate within a few minutes

Commands:

1. Google Search: "google", args: "input": "<search>"

2. Browse Website: "browse_website", args: "url": "<url>", "question": "<what_you_want_to_find_on_website>"

3. Start GPT Agent: "start_agent", args: "name": "<name>", "task": "<short_task_desc>", "prompt": "<prompt>"

4. Message GPT Agent: "message_agent", args: "key": "<key>", "message": "<message>"

5. List GPT Agents: "list_agents", args:

6. Delete GPT Agent: "delete_agent", args: "key": "<key>"

7. Clone Repository: "clone_repository", args: "repository_url": "<url>", "clone_path": "<directory>"

8. Write to file: "write_to_file", args: "file": "<file>", "text": "<text>"

9. Read file: "read_file", args: "file": "<file>"

10. Append to file: "append_to_file", args: "file": "<file>", "text": "<text>"

11. Delete file: "delete_file", args: "file": "<file>"

12. Search Files: "search_files", args: "directory": "<directory>"

13. Analyze Code: "analyze_code", args: "code": "<full_code_string>"

14. Get Improved Code: "improve_code", args: "suggestions": "<list_of_suggestions>", "code": "<full_code_string>"

15. Write Tests: "write_tests", args: "code": "<full_code_string>", "focus": "<list_of_focus_areas>"

16. Execute Python File: "execute_python_file", args: "file": "<file>"

17. Generate Image: "generate_image", args: "prompt": "<prompt>"

18. Send Tweet: "send_tweet", args: "text": "<text>"

19. Do Nothing: "do_nothing", args:

20. Task Complete (Shutdown): "task_complete", args: "reason": "<reason>"

Resources:

1. Internet access for searches and information gathering.

2. Long Term memory management.

3. GPT-3.5 powered Agents for delegation of simple tasks.

4. File output.

Performance Evaluation:

1. Continuously review and analyze your actions to ensure you are performing to the best of your abilities.

2. Constructively self-criticize your big-picture behavior constantly.

3. Reflect on past decisions and strategies to refine your approach.

4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.

You should only respond in JSON format as described below

Response Format:

{

"thoughts": {

"text": "thought",

"reasoning": "reasoning",

"plan": "- short bulleted\n- list that conveys\n- long-term plan",

"criticism": "constructive self-criticism",

"speak": "thoughts summary to say to user"

},

"command": {

"name": "command name",

"args": {

"arg name": "value"

}

}

}

Ensure the response can be parsed by Python json.loads
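To make the last instruction concrete, here is a hedged sketch of how a reply in the response format above could be parsed and checked before a command is run. The function name `parse_response` and the `ALLOWED_COMMANDS` subset are assumptions for illustration; AutoGPT's own parsing code may differ.

```python
import json

# Hypothetical subset of the command names listed in the system message.
ALLOWED_COMMANDS = {"google", "read_file", "write_to_file", "task_complete"}


def parse_response(raw):
    """Parse one model reply and return (command name, args dict).

    Raises ValueError when the reply is not valid JSON or does not follow
    the response format.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or not isinstance(data.get("command"), dict):
        raise ValueError("reply has no 'command' object")
    name = data["command"].get("name")
    args = data["command"].get("args", {})
    if name not in ALLOWED_COMMANDS:
        raise ValueError(f"unknown command: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'args' must be a JSON object")
    return name, args


reply = '{"thoughts": {"text": "look it up"}, "command": {"name": "google", "args": {"input": "Auto-GPT"}}}'
print(parse_response(reply))
```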

Given the generality of this architecture, the potential applications are many and disruptive. For instance, having AI agents browsing and using arbitrary websites would alter how the web is consumed, with a deep impact on online shopping, advertisement and SEO.

Do they work? Nope!

The problem is … AI agents do not work. The typical session of AutoGPT ends up stuck in an infinite cycle of actions, such as google something, write it to file, read the file, google again… In general, goals requiring more than 4-5 actions seem to be out of reach. Why is that?

To answer this, let’s disregard any error due to programming bugs, bad string formatting, corrupted files, etc. These are minor problems that can be fixed without any new conceptual insight. We will instead focus on issues that stem from deeper architectural shortcomings, using AutoGPT for concreteness. In no particular order:

- LLMs are stochastic parrots, albeit very good ones, therefore their planning and subgoal generation fail to satisfy basic logical constraints, such as the interdependence between tasks. For simple goals solvable in a few steps this is not an issue, since the correct plan can be memorised during training, but for challenging goals there is a combinatorial explosion of possibilities, making extrapolation from the training set impossible.

- In-context learning using vector embeddings is an effective tool to quickly increase the relevance of the LLM answers, but it has limitations. Naive semantic search over previously executed actions increases the likelihood of getting stuck in an infinite loop, as many keywords appear both in the goal and in the actions carried out to fulfil that goal. The LLM should be prompted with more relevant context, and a better separation of short-term and long-term memory seems due.

- The files created during the execution and the saved embeddings are not enough to build a world model describing what has been discovered so far. Without this, the agent is prone to repeating itself.
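The keyword-overlap problem in the second point can be reproduced with a toy example. The sketch below uses bag-of-words cosine similarity as a crude stand-in for vector embeddings (an assumption made to keep it self-contained), and the goal and memory strings are invented for illustration.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between the word-count vectors of two strings."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


goal = "find the price of a flight to rome"
memory = [
    "google search: price of a flight to rome",          # a past action
    "write_to_file: notes.txt",                           # another past action
    "observation: cheapest fare is 120 euro on friday",   # the useful finding
]

# Rank memories by similarity to the goal, most similar first.
ranked = sorted(memory, key=lambda m: cosine(goal, m), reverse=True)
print(ranked[0])
```

The top-ranked memory is the earlier search action, because it shares most of its words with the goal, while the observation that actually answers the question shares none. An agent reminded mainly of its own previous search is nudged towards searching again.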

On the positive side, the idea of using tools seems to work well, and I see them staying as they are in future iterations of the architecture. In many applications there is no real point in asking LLMs to count, just let them use a calculator.

AutoGPT as a full AGI?

I really like the AutoGPT experiment, as in gluing LLM outputs together and seeing how far we can push it towards general intelligence. The concept makes sense: LLMs are enormous compressed knowledge bases capturing recurring patterns in language, so perhaps if we augment them with memory and prompt them in the right way we can elicit some intelligent behaviour. But AutoGPT has also been hyped as a potential road to human-level AGI (Artificial General Intelligence) and as being able to recursively self-improve. I don’t see this happening for a few reasons:

- Continual learning is weak: it amounts to saving files and using vector embedding databases. The LLMs themselves are static, while one would expect them to get better over time.

- The whole framework does not take into account uncertainty and the fuzziness of the real world. There should be uncertainty in the inference, uncertainty in the knowledge base and in the current state. This strongly limits the environments that the AI can interact with.

- There is no mention of resources being finite, as in inference time and cost. Efficiency is the cornerstone of many definitions of intelligence, which underlines that brute-force search is not intelligence. This is another explanation for the infinite loops of AutoGPT.

- Recursive self-improvement is hard. This is not an AutoGPT problem; it is a generic issue with seed AGIs which I believe is underappreciated and one of the strongest arguments against super-intelligent AIs taking over the world. Getting good by learning from examples and demonstrations is the easy part, the pattern-matching approach, but how do we improve from there? One can obtain good self-improvement with self-play and similar strategies in simple environments (e.g. playing Go) in which rewards are clear and feedback cycles are relatively short. But getting good in a real-life environment is in a different league: rewards, actions and knowledge base are probabilistic, learning cycles become increasingly long and expensive as the task complexity increases, and no human teaching can be leveraged.

If full AGI seems too much, I’m positive on AutoGPT as a viable road to a proto-AGI, that is a non-self-improving AI good enough to automate multiple real life tasks of economic value. After all, LLMs are just getting better and cheaper from here.

I always strive to be proactive and give constructive feedback. This post is no exception, so in the last section I will present the main fixes that I see as necessary to get AutoGPT to the proto-AGI stage.

How to fix AutoGPT

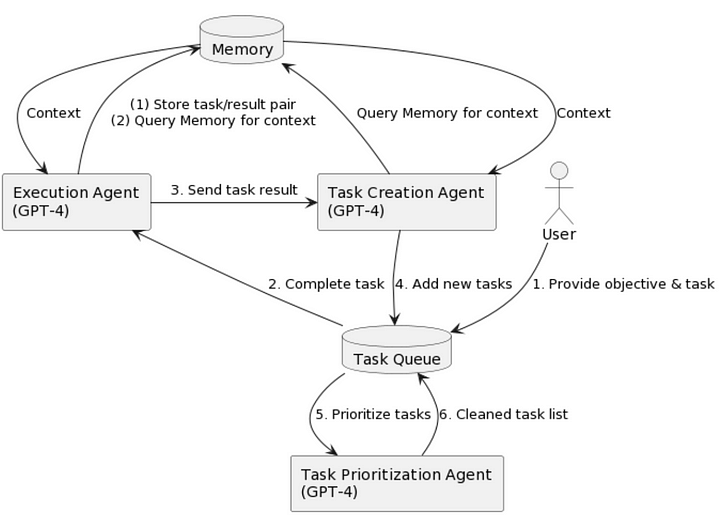

The overall strategy is: generate extremely granular subgoals, ideally subgoals which can be completed with atomic actions, then use tools to execute those actions. With respect to the basic structure shown in the figure (taken from BabyAGI, which has a similar architecture to AutoGPT), we will propose significant improvements to the task-creation agent, that is the subgoal creation mechanism, and to the task prioritisation agent. We will additionally introduce two new concepts: a utility function and world models. The working hypothesis is that implementing the above gets us to helpful AI agents. In any case, this architecture would still be unable to do robust continual learning and deal with certain kinds of uncertainty; for that a significant rework of the whole architecture is needed, but this is definitely beyond the scope of this blog post.

We will start with the utility function, as subsequent components build on it. AutoGPT does subgoal creation and every other activity by prompting LLMs without any real concern for resource usage and user preferences. As is well known from decision theory and reinforcement learning, we can encode the preferences of the user in a scalar utility (or reward) function. Moreover, we can estimate the cost incurred when executing an action, in terms of compute, time, processes, money or similar scarce resources. Finally, we can take note of the confidence of the LLM’s inference in any given statement since, everything else being equal, it makes sense to build plans with greater confidence. Putting it all together, armed with this generalised utility function we now have a criterion to guide subgoal creation and planning, since any LLM statement is now weighted. Notably, it is the LLM itself doing this value assignment, even though building custom classifiers is also an option, similarly to the reward preference models of RLHF.
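One possible way to combine the three ingredients above (user preference, cost and confidence) into a single score is sketched below. The specific formula, confidence times preference minus weighted cost, is my own illustrative assumption rather than a prescribed definition, and the `Candidate` fields and example numbers are hypothetical; in practice the values would come from the LLM or from a custom classifier.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    description: str
    preference: float  # how much the user values the outcome, in [0, 1]
    cost: float        # estimated resource cost, on the same scale
    confidence: float  # confidence that the statement holds, in [0, 1]


def utility(c, cost_weight=1.0):
    """Expected value of the outcome minus the weighted cost of getting it."""
    return c.confidence * c.preference - cost_weight * c.cost


candidates = [
    Candidate("Scrape every page of the site", preference=0.9, cost=0.5, confidence=0.5),
    Candidate("Read the project README", preference=0.6, cost=0.1, confidence=0.9),
]
best = max(candidates, key=utility)
print(best.description)
```

With these numbers the cheaper, more certain candidate wins even though its raw preference is lower, which is the behaviour we want from a resource-aware agent.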

Subgoaling, the creation of intermediate tasks to achieve the user-defined goals, is crucial to task-directed AIs such as AutoGPT. If even a single key subgoal is missing, the whole plan fails. The current architecture is very primitive here: just ask the LLM to break down the goal into subtasks! There is something poetic about the simplicity of this approach, which is basically “to get the solution, just ask the LLM for the solution”. If you are familiar with the exploration-exploitation trade-off, this is a case of going all-in on exploitation: the best plan should already be in the training data or be very easy to extrapolate from it. This is rarely the case in interesting tasks, so we want to introduce the ability to explore different possible subgoals, the ability to plan. More on this later; for now let’s focus on the creation of subgoals.

Breaking down a goal into subgoals is an active area of research, spanning approaches such as Creative Problem Solving, Hierarchical Reinforcement Learning and Goal Reasoning. For instance, using Creative Problem Solving we can tackle situations where the initial concept space (the LLM knowledge base) is insufficient to lay down all the subgoals. We can use Boden’s three levels of creativity: 1. Exploring the universal conceptual space. 2. Combining concepts within the initial conceptual space. 3. Applying functions or transformations to the initial conceptual space.

- The simplest approach here is googling, since our initial concept space contains some keywords which will be relevant in the final solution, but the AI agent is still missing some relevant context. Once new root concepts have been discovered, we can keep exploring, guided by traditional search-based planning techniques such as Monte Carlo Tree Search and leveraging the utility function built before as a guiding principle.

- Combinatorial creativity is straightforward to understand (this is what many startups do… AI powered blockchain!), but computationally challenging. Indeed, given n statements there are 2^n ways to group them into subsets, each a potential subgoal. To tame this exponential growth we need to heavily prune the combinations with heuristics, such as heuristics based on maximising the utility.

- There are a large number of potential functions to apply, the choice of which influences the “personality” of the AI agent. Two powerful functions are deductions and analogies. Forward-chaining deductions starting from the initial goals produce statements which are highly likely to be successful subgoals; this can be implemented using LLM inference with the aid of world models. Analogical reasoning has been pitched as the core of cognition, see e.g. the work around Copycat, and is instrumental in connecting ideas at different levels of abstraction (therefore useful if one is interested in recursively self-improving agents).
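As an illustration of the pruning idea in the second point, here is a small sketch that uses beam search to explore combinations of statements, keeping only the few highest-utility combinations at each size instead of all 2^n subsets. Beam search is just one possible heuristic that I picked for brevity; `beam_combine`, `toy_utility` and the values are hypothetical.

```python
def beam_combine(statements, score, beam_width=3, max_size=3):
    """Grow combinations one statement at a time, keeping the best few per size."""
    beam = sorted(
        (frozenset([s]) for s in statements), key=score, reverse=True
    )[:beam_width]
    kept = list(beam)
    for _ in range(max_size - 1):
        # Extend every surviving combination by one statement it lacks.
        expanded = {combo | {s} for combo in beam for s in statements if s not in combo}
        if not expanded:
            break
        beam = sorted(expanded, key=score, reverse=True)[:beam_width]
        kept.extend(beam)
    return sorted(kept, key=score, reverse=True)[:beam_width]


# Toy utility: the value of each statement minus a small cost per statement used.
values = {"a": 3.0, "b": 2.0, "c": 1.0, "d": -1.0}


def toy_utility(combo):
    return sum(values[s] for s in combo) - 0.5 * len(combo)


best = beam_combine(list(values), toy_utility, beam_width=2, max_size=3)
print([sorted(combo) for combo in best])
```

The price of pruning is that a good combination can be missed if its parts look unpromising on their own, so the beam width is itself a trade-off between cost and coverage.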

Since building a coherent chain of subgoals is make-or-break for our AI agent, it is important that each subgoal includes preconditions and postconditions, similarly to tasks in classic AI planning. Here LLMs come in very handy for translating our natural language goals into a more structured representation such as PDDL (a popular classic AI planning language) or SAT formulas. If we do so, we can check the logical consistency of our subgoal chain.
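A minimal sketch of such a consistency check, in plain Python rather than PDDL or SAT, could look as follows. It assumes a simplified model in which postconditions only add facts and never remove them; the `Subgoal` class, the fact names and the example chain are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subgoal:
    name: str
    preconditions: frozenset   # facts that must hold before the subgoal starts
    postconditions: frozenset  # facts that hold once the subgoal is done


def first_inconsistency(chain, initial_facts):
    """Return the first subgoal whose preconditions are unmet, or None."""
    facts = set(initial_facts)
    for subgoal in chain:
        if not subgoal.preconditions <= facts:
            return subgoal
        facts |= subgoal.postconditions  # simplification: facts are never deleted
    return None


search = Subgoal("search the web", frozenset({"has_topic"}), frozenset({"has_sources"}))
summarise = Subgoal("summarise sources", frozenset({"has_sources"}), frozenset({"has_notes"}))
write = Subgoal("write the article", frozenset({"has_notes"}), frozenset({"has_article"}))

print(first_inconsistency([search, summarise, write], {"has_topic"}))  # None: chain is consistent
print(first_inconsistency([search, write], {"has_topic"}).name)        # the step missing its notes
```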

Regardless of how we built the candidate subgoals, we can now leverage classical AI planners and SAT solvers to find optimal plans and schedules. This replaces the prioritization agent. A plan, that is a chain of subgoals, can then be executed with the relevant tools. If a subgoal is not atomic, that is, it is not obvious how to use a single tool to satisfy it, the subgoal should be recursively treated as the main goal of a new subprocess. This is similar to the “impasse” subgoaling strategy of the SOAR architecture.
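To give a flavour of what a planner does with such subgoals, the sketch below runs a breadth-first forward search over sets of facts and returns a shortest sequence of actions reaching the goal. It is a toy stand-in for a real classical planner or SAT solver, it ignores delete effects and recursion into non-atomic subgoals, and the `Action` tuples and fact names are hypothetical.

```python
from collections import deque
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    preconditions: frozenset
    effects: frozenset  # simplification: effects only add facts


def shortest_plan(initial, goal, actions):
    """Breadth-first search over fact sets; returns action names or None."""
    start, goal = frozenset(initial), frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        facts, plan = queue.popleft()
        if goal <= facts:
            return plan
        for action in actions:
            if action.preconditions <= facts:
                new_facts = facts | action.effects
                if new_facts not in seen:
                    seen.add(new_facts)
                    queue.append((new_facts, plan + [action.name]))
    return None  # the goal cannot be reached with the given actions


actions = [
    Action("google", frozenset(), frozenset({"has_sources"})),
    Action("browse_website", frozenset({"has_sources"}), frozenset({"has_notes"})),
    Action("write_to_file", frozenset({"has_notes"}), frozenset({"has_article"})),
    Action("generate_image", frozenset(), frozenset({"has_image"})),
]
plan = shortest_plan(set(), {"has_article"}, actions)
print(plan)
```

Because the search is breadth-first, the irrelevant `generate_image` action never makes it into the returned plan, and an unreachable goal yields `None` instead of an endless loop.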

The final element is a world model, so that the AI agent has a stable and coherent view of the environment, which drastically reduces the number of iterations required by our agent. An example I like is this DeepMind paper in which an LLM is given a virtual playground to perform experiments and test different laws. This is reminiscent of how we simulate different scenarios in our head to reach a conclusion. Good world models can be very specialised, so I suspect it would take too much effort to build one from scratch inside AutoGPT. Instead, I see world models being accessed via an API, similarly to tools, and developed by third-party projects.

For instance, consider the simple task of opening a door. An LLM may naively suggest a plan such as 1. Take the door key. 2. Use the key to unlock the door. 3. Turn the door knob. 4. Push the door. But if the door has an additional manual safety lock, this plan would fail. If instead the agent has access to the model of a generic door, with initial variables such as the number of locks left unspecified, it can be guided to enquire about the door’s characteristics in order to build a model of the door it has in front of it. Once the model is identified as a door with two locks, the agent is on the right track to successfully plan how to open it. Once the model is in place, the agent is also able to simulate a complicated course of actions, such as what amount of force would be required to bring down such a door. World models will really benefit from working with multimodal LLMs, as describing the models in a text file can be verbose, easily hitting the context length limits.
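The door example can be turned into a tiny sketch of a world model with an unspecified variable. The `DoorModel` class and its methods are hypothetical and deliberately minimal: the point is only that the model tells the agent what to ask before it commits to a plan.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DoorModel:
    num_locks: Optional[int] = None  # None means "not observed yet"

    def questions(self):
        """Questions the agent should ask before planning."""
        if self.num_locks is None:
            return ["How many locks does the door have?"]
        return []

    def plan(self):
        """Steps to open the door, available only once the model is fully specified."""
        if self.questions():
            raise ValueError("door model is incomplete, enquire first")
        steps = [f"Unlock lock {i}" for i in range(1, self.num_locks + 1)]
        return steps + ["Turn the door knob", "Push the door"]


door = DoorModel()
print(door.questions())  # the agent is guided to enquire about the locks
door.num_locks = 2       # answer obtained from the environment
print(door.plan())
```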

As with any planner, there is a trade-off between optimality and speed, therefore this new architecture is going to be far slower than AutoGPT, spending significantly more time generating world models and subgoals, followed by planning over the consistent chains of subgoals. But at this stage we care more about the agents actually solving something!

Outro

To recap this post, we have talked about the potential of AI agents, analysed their current shortcomings and proposed some architecture fixes to get to useful agents. It remains to be seen whether GPT-4 is enough as a foundational LLM. Now, it’s time to build! If you are working on similar topics I would love to connect.

Some references I found useful on the topic:

https://arxiv.org/pdf/2205.11822.pdf Maieutic Prompting: Logically Consistent Reasoning with Recursive Explanations

https://arxiv.org/abs/2305.14992 Reasoning with Language Model is Planning with World Model

https://arxiv.org/pdf/2305.10601.pdf Tree of Thoughts: Deliberate Problem Solving with Large Language Models

https://arxiv.org/abs/2303.11366 Reflexion: Language Agents with Verbal Reinforcement Learning

https://www.ijcai.org/Proceedings/2019/0772.pdf Subgoal-Based Temporal Abstraction in Monte-Carlo Tree Search

https://arxiv.org/pdf/2108.11204.pdf Subgoal Search For Complex Reasoning Tasks

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4345499/ Divide et impera: subgoaling reduces the complexity of probabilistic inference and problem solving

https://arxiv.org/pdf/2208.04696.pdf Investigating the Impact of Backward Strategy Learning in a Logic Tutor: Aiding Subgoal Learning towards Improved Problem Solving

https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1004864 Problem Solving as Probabilistic Inference with Subgoaling: Explaining Human Successes and Pitfalls in the Tower of Hanoi

https://openreview.net/forum?id=FrJFF4YxWm Learning Rational Skills for Planning from Demonstrations and Instructions

https://par.nsf.gov/biblio/10111142 Why Can’t You Do That HAL? Explaining Unsolvability of Planning Tasks

https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1007594 Discovery of hierarchical representations for efficient planning

https://core.ac.uk/download/pdf/328764254.pdf Beyond Subgoaling: A Dynamic Knowledge Generation Framework for Creative Problem Solving in Cognitive Architectures

https://github.com/ronuchit/GLIB-AAAI-2021 Efficient Exploration for Relational Model-Based Reinforcement Learning via Goal-Literal Babbling. AAAI 2021.

https://www.jair.org/index.php/jair/article/view/13864 Creative Problem Solving in Artificially Intelligent Agents: A Survey and Framework

https://en.wikipedia.org/wiki/Soar_(cognitive_architecture) Soar (cognitive architecture)

https://arxiv.org/abs/2304.11477 LLM+P: Empowering Large Language Models with Optimal Planning Proficiency

https://arxiv.org/abs/2301.13379 Faithful chain-of-thought reasoning

https://arxiv.org/pdf/2210.05359.pdf Mind’s eye: grounded language model reasoning through simulation
