A pragmatic metric for Artificial General Intelligence

Current definitions of intelligence are either partial or do not provide an actionable evaluation of intelligence, i.e. something tangible that can be used to quantify the potential of a given AGI. In the absence of such a definition, we commonly equate intelligence with the accuracy achieved on an intelligence test, for instance an IQ test, but this is prone to evaluation overfitting. We review existing definitions and then propose a new heuristic definition, which we call the PGI Index or Pragmatic General Intelligence Index. It relates intelligence to the ability to perform arbitrary tasks, but treats task accuracy as just one of the factors contributing to intelligence. In a toy model, we compute the PGI Index of an average human and of the Gato AI.

Introduction: Why defining intelligence is hard and the goal of the PGI index

When talking about the intelligence of an artificial general intelligence system, we are really referring to the elusive notion of intelligence that we apply to humans, often measured with the infamous IQ test. Indeed, the “artificial” bit tells us only how the system is implemented, while the range of tasks on which the system is judged can be the same for humans and AIs. Given this, while our main interest is to provide a pragmatic metric for artificial general intelligence, we are forced to face the bigger challenge of defining intelligence in a way that also applies to humans, or in fact to any generic system able to carry out tasks. Note that here we assume such a feat is possible in the first place, following authors such as J. Hernández-Orallo [1].

Intelligence is a prominent member of a very special club: concepts that are at the centre of a huge field of research, yet lack a formal, consensus definition. Members of this club include life, consciousness and string theory. But why is defining intelligence hard in the first place?

There are many reasons (for an in-depth analysis of the concept of general intelligence I suggest looking at [1], from which we borrow many quotes and references, and also [2-6]), but fundamentally they boil down to four points:

1. We don’t know enough about the internals of the system for which the concept of intelligence was originally created, a.k.a. the brain.

2. Benchmark overfitting. Intelligence, whatever it is, is a moving target and is related to the capability of learning. Benchmarks can therefore be gamed by systems that we would be reluctant to call generally intelligent.

3. The more rigorous definitions, for instance those based on algorithmic information theory or Bayesian inference (e.g. AIXI), are too unwieldy to be used in real life, when they are not uncomputable altogether. The more intuitive definitions, instead, are too vague to be actionable.

4. On a sociological level, intelligence is a controversial topic, since it can be abused for discrimination and can have a direct impact on livelihood and income, for instance when used in selection tests.

In the following we are concerned with an easy-to-estimate evaluation of general intelligence. Since ease of use matters more here than mathematical rigour, we address point 3 with a very lean formalism: a single multiplicative formula whose parameters can be reasonably guessed on the fly. We sidestep point 1 and similar concerns by focusing purely on performance metrics over a set of tasks, without regard for the internals of the system: intelligence is, by definition, the result of an intelligence test composed of a set of tasks. Regarding point 2, we argue that no reliable intelligence test can be fixed once and for all, so the set of tasks needs to include tasks not known in advance. We are immune to the concerns of point 4, since we focus on a metric for AGIs that only as a byproduct applies to humans, and that would be too imprecise to evaluate fine differences among humans anyway. Roughly speaking, we sacrifice accuracy for ease of use: at this stage it is easy to evaluate differences between AGIs, or between AGIs and humans, but we expect differences among humans to fall inside the error bars of the formula’s parameters.

As we will see, the rough idea is the following:

$$ PGII = f(Accuracy) * g(Resource \, Consumption) * h(Generalisation \, Abilities) $$

For some functions f, g, h.

A tool like the PGI Index (or PGII for brevity) can allow entrepreneurs, researchers, investors and policy makers to quickly assess the value of AGI systems. Ideally, it should be possible to estimate the impact of a new AGI system simply by reading its release paper, and to compare it with existing systems even if the sets of tasks on which the systems have been tested are not exactly the same.

The PGI Index should not be considered a fully rigorous estimate of intelligence (even though such a rigorous metric may be built following similar ideas), but rather a good heuristic. This article was inspired by the release of Gato [7], which created plenty of confusion in the AI community regarding the actual generality of the system. The PGI Index underlines how Gato, like any other current AI system, despite superhuman performance on some tasks, is lacking in key areas that appear unlikely to improve in the short term, even with access to large amounts of compute.

The PGI Index presented here should be considered a version 0.1, to be fine-tuned as more AGI architectures become available.

Existing definitions of intelligence

Let’s explore previous definitions of intelligence and intelligence tests. [2] gives a comprehensive overview of intelligence definitions, which can be complemented with a couple of definitions proposed more recently such as in [1] and [6]. Here we will make a selection of the definitions which better encapsulate the concept of intelligence as related to learning in dynamic environments. In no particular order:

“. . . the ability to solve hard problems.” M. Minsky [Min85]

“the intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience, and generalisation difficulty”; “intelligence is the rate at which a learner turns its experience and priors into new skills at valuable tasks that involve uncertainty and adaptation” Chollet [2019]

“Intelligence measures an agent’s ability to achieve goals in a wide range of environments.” Legg and Hutter [2007]

“Any system . . . that generates adaptive behaviour to meet goals in a range of environments can be said to be intelligent.” D. Fogel [Fog95]

“the ability of a system to act appropriately in an uncertain environment, where appropriate action is that which increases the probability of success, and success is the achievement of behavioural subgoals that support the system’s ultimate goal.” J. S. Albus [Alb91]

“Intelligence is the ability to use optimally limited resources – including time – to achieve goals.” R. Kurzweil [Kur00]

“Achieving complex goals in complex environments” B. Goertzel [Goe06]

These definitions are intuitive and certainly close to what we mean by intelligence, but how do we actually measure intelligence? Is intelligence a number, a vector, a tensor or what? We need to define an intelligence test, the analogue of the clock for time, or a thermometer for temperature.

The most famous intelligence test is surely the IQ test. It is now fairly uncontroversial that the IQ test only measures something correlated with intelligence; indeed, simple ad-hoc programs can be built to score well on it, as done in [8]. I will spend a bit more time on the lesser-known C-test from algorithmic information theory which, even though it can be similarly trivialised, can be seen as a step forward in universality, since it removes many human biases about which answer is correct. These are typical instances of C-tests [1]:

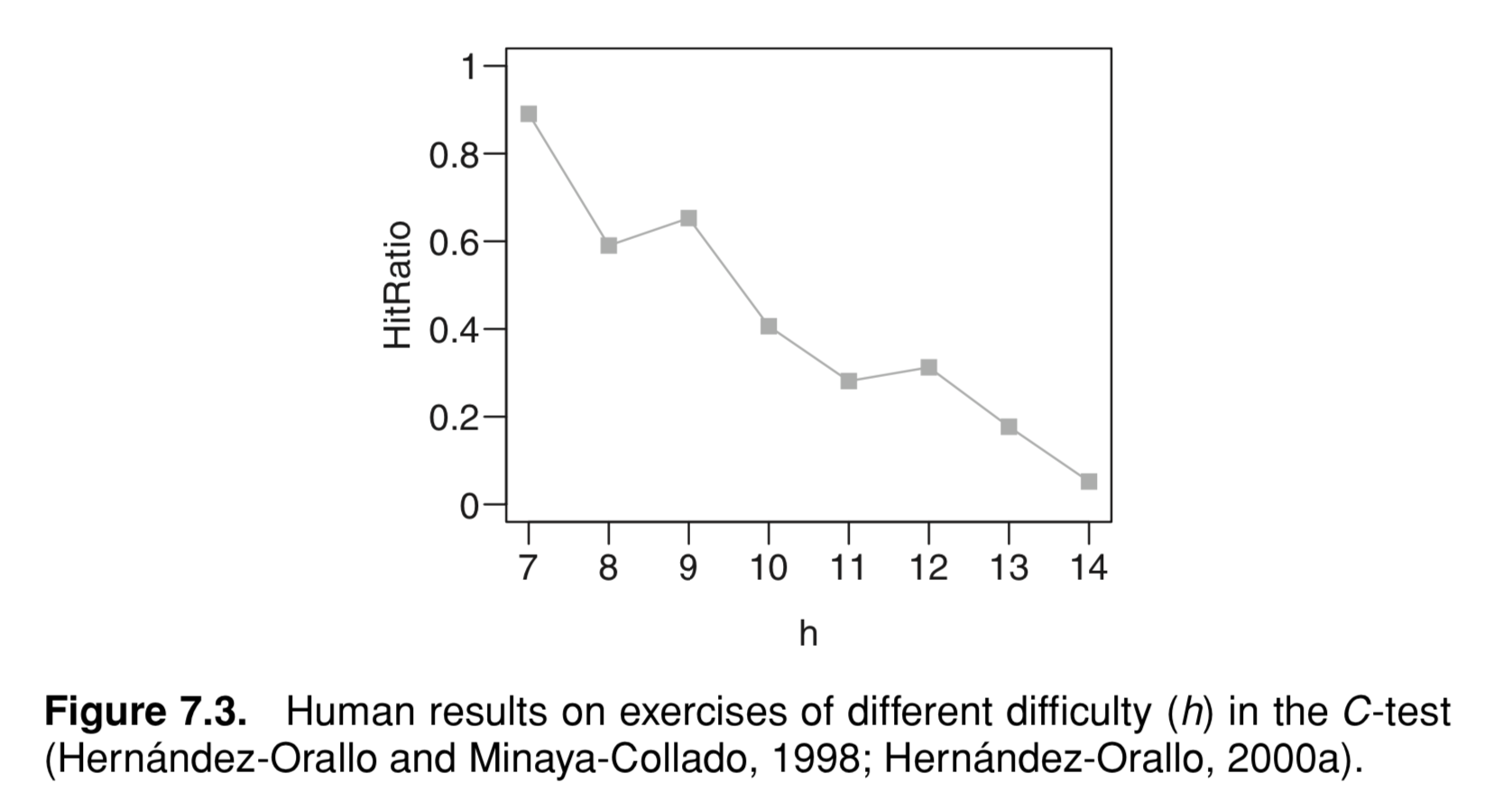

h=7 : a,b,c,d,…

h=8 : a,a,a,b,b,b,c,…

h=9 : a,d,g,j,…

h=10 : a,c,b,d,c,e,…

h=11 : a,a,b,b,z,a,b,b,…

h=12 : a,a,z,c,y,e,x,…

h=13 : a,z,b,d,c,e,g,f,…

h=14 : c,a,b,d,b,c,c,e,c,d,…

The examples are ranked in order of increasing complexity. For each row, try to guess the next letter in the sequence. When you are done, look at the answers below!


Answers: 7: e, 8: c, 9: m, 10: d, 11: y, 12: g, 13: h, 14: d.

The C-tests remove biases because they are constructed so that the shortest program generating the sequence cannot be rivalled by any other program of similar complexity that yields a different continuation. The answer must therefore be the one of optimal Kolmogorov complexity, and this holds universally for any kind of agent (humans, AIs, etc.). The complexity h is also computed algorithmically: it is Levin’s complexity. There is no time to go too deep here, but in a nutshell the difficulty of a task can be defined as the logarithm of the number of computational steps that Levin’s universal search (a meta-search) takes to find an acceptable policy for the task, given a tolerance. This can be shown to be proportional to the minimum, over acceptable policies, of the length of the policy plus the logarithm of the number of computational steps it takes. A survey of results from a set of 65 candidates aged between 14 and 32 (see figure) shows that, as expected, h correlates negatively with test results (HitRatio = 1 means all answers are correct).
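For reference, the complexity described above is usually written as Levin’s Kt. Assuming the standard textbook form, with $U$ a universal machine, $\ell(p)$ the length of program $p$ and $t(p)$ the number of steps it runs before producing $x$:

$$ Kt(x) = \min_{p \,:\, U(p) = x} \left\{ \ell(p) + \log_2 t(p) \right\} $$

For a task rather than a sequence, the minimum is taken over the policies that are acceptable within the given tolerance.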

As said, both IQ tests and C-tests can be trivialised by specialised programs. This common problem of intelligence benchmarks is called ‘evaluation overfitting’ (Whiteson et al., 2011), ‘method overfitting’ (Falkenauer, 1998) or ‘clever methods of overfitting’ (Langford, 2005) [1]. If the problems or instances that represent a task (and their probabilities) are publicly available beforehand, systems will be specialised for the expected cases instead of the task in general. To reduce this problem, some authors suggested that the distribution used to sample instances should not be known in advance (the “secret generalized methodology”, Whiteson et al., 2011), but we believe a stronger statement is needed, namely that not all tasks should be known in advance. Only computers that also pass tasks not known in advance “can be said to be truly intelligent” (Detterman, 2011).

Some authors argued that intelligence is better defined as a vector. Such a vector was proposed by Meystel (2000b), who asked “whether there exists a universal measure of system intelligence such that the intelligence of a system can be compared independently of the given goals” (Meystel, 2000b). … The recurrent debates … dealt with the distinction between measuring performance and measuring intelligence. The former was defined in terms of the “vector of performance”, a set of indicators of the task the system is designed for. In contrast, the “mysterious vector of intelligence” was “still in limbo”, as it should contain “the appropriate degrees of generalisation, granularity, and gradations of intelligence”.

As said, the PGII is purely a measure of performance and defines intelligence (assuming the system is doing its best in tackling the tasks) as the result of those performance tests. Nevertheless, there is a sense in which the “mysterious vector of intelligence” enters the definition of the PGI Index: the task distribution. The tasks on which the system is evaluated need to be heterogeneous enough to test all “areas of intelligence”. We define a set of tasks as complete if it probes every possible “area of intelligence”.

To break the circularity in the definition of the task distribution we take, fittingly, a pragmatic approach. Given a set of tasks, we say that it is complete if adding any further task does not significantly change the PGI Index of any given system. Conversely, every time we find a task that sharply decreases the PGI Index once added to the set, we should include that task in the set. Intuitively, such a task probes a component of intelligence that the tested system did not need in order to achieve high PGI scores, but which will be part of the evaluation from now on. We conjecture that a complete set of tasks exists and has a small, finite number of components. We also note that any set of tasks can only be said to be complete “until proven wrong”.
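The completeness procedure above can be sketched as a loop over candidate tasks. This is a minimal illustration under stated assumptions: tasks are plain names, `grow_task_set`, `toy_score` and `SKILLS` are hypothetical, the 20% relative drop standing in for “sharply” is an arbitrary threshold, and the toy scorer is plain accuracy rather than a full PGII.

```python
from typing import Callable, Dict, List, Sequence, Set

# Any function that scores a system on a list of tasks (hypothetical interface).
Scorer = Callable[[str, Sequence[str]], float]


def grow_task_set(tasks: Sequence[str], candidates: Sequence[str],
                  systems: Sequence[str], score: Scorer,
                  sharp_drop: float = 0.2) -> List[str]:
    """Add every candidate task that sharply lowers the score of some system.

    `sharp_drop` is an assumed threshold on the relative drop. If no candidate
    is added, the set is "complete until proven wrong" for these systems.
    """
    current = list(tasks)
    for task in candidates:
        for system in systems:
            before = score(system, current)
            after = score(system, current + [task])
            if before > 0 and (before - after) / before >= sharp_drop:
                current.append(task)
                break  # one system failing the task is enough to keep it
    return current


# Toy example: each system "solves" a fixed, hypothetical set of tasks.
SKILLS: Dict[str, Set[str]] = {
    "gato": {"atari", "captioning"},
    "human": {"atari", "captioning", "coffee"},
}


def toy_score(system: str, tasks: Sequence[str]) -> float:
    """Fraction of tasks the system solves (a stand-in for the full PGII)."""
    return sum(task in SKILLS[system] for task in tasks) / len(tasks)


print(grow_task_set(["atari", "captioning"], ["coffee", "atari"],
                    ["gato", "human"], toy_score))
# ['atari', 'captioning', 'coffee']
```

Here “coffee” is kept because it drops the toy score of `gato` from 1.0 to about 0.67, while a second “atari” changes nothing and is discarded.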

Thanks to this definition we don’t need to specify exactly what the “areas of intelligence” are, but it is nevertheless interesting to look at what other authors did. For instance, Adams et al. (2012) “recognise six kinds of scenarios: general video-game learning, preschool learning, reading comprehension, story or scene comprehension, school learning and the ‘Wozniak Test’ (walk into an unfamiliar house and make a cup of coffee). They propose “AGI test suites” such that “the total set of tasks for a scenario must cover all the competency areas” and they must do it in such a way that the tasks are so varied and numerous (or previously unknown) that the “big switch” problem is avoided.” The “big switch” problem (Ernst and Newell, 1969), also called the AI homunculus problem, arises when a pseudo-general AI is built simply as an array of specialist models, selected by a switch according to the problem at hand; the switch operator is usually an AI researcher.

Adams et al. also acknowledge one of the possible issues with this approach: they advocate for several suites, as they “doubt any successful competition could ever cover the full extent of a roadmap such as this”. Adams et al. (2012) also define the competency areas in AGI as:

- Perception

- Attention

- Planning

- Actuation

- Communication

- Emotion

- Building/creation

- Memory

- Social interaction

- Motivation

- Reasoning

- Learning

- Modelling self/other

- Use of quantities

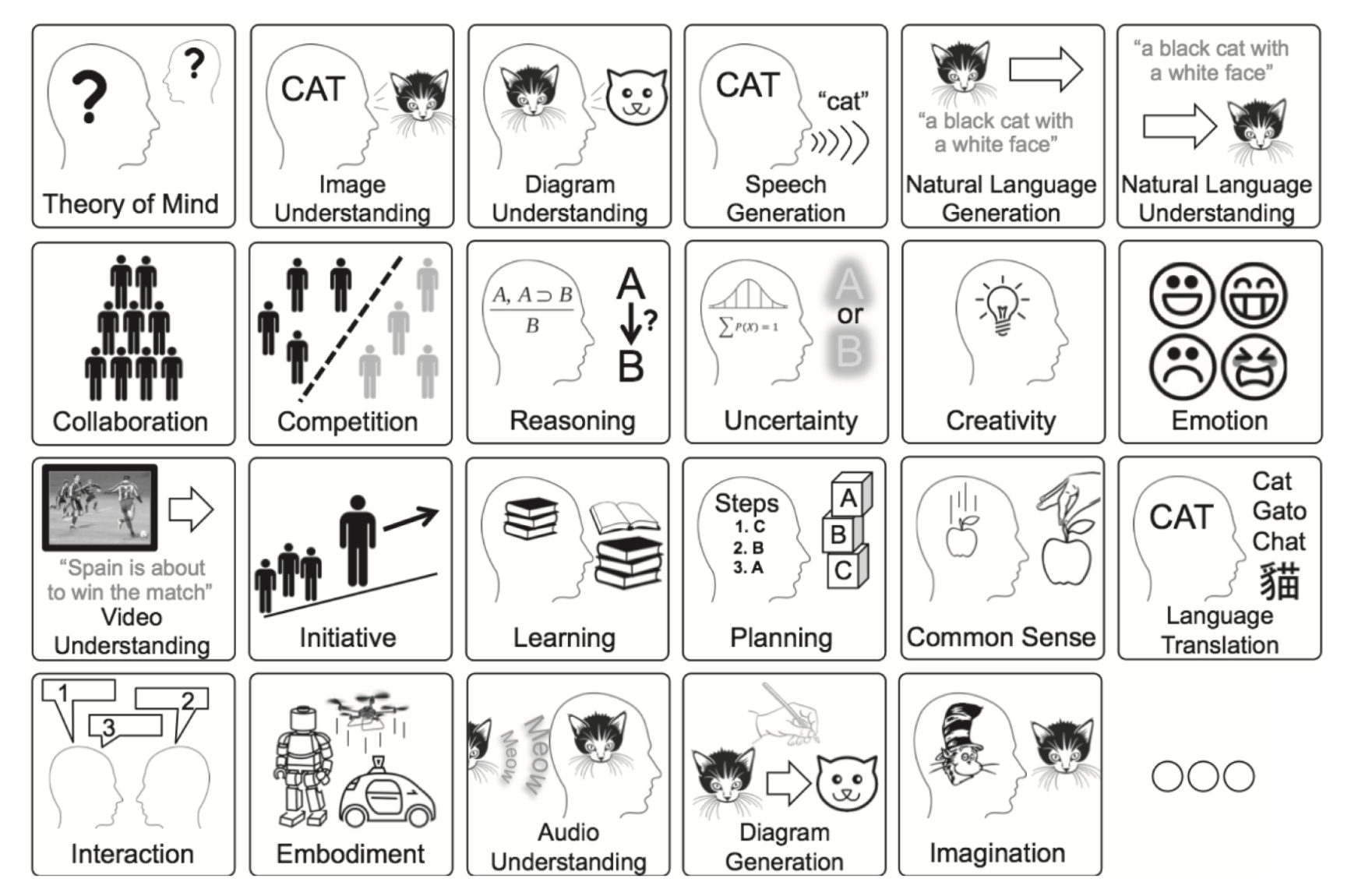

Adams et al. also encourage “I-athlon events” (Adams et al., 2016) to reliably test AGIs. The figure above shows some of the areas proposed to be covered in the I-athlon events.

It is worth mentioning the famous g-factor of intelligence at this point, and clarifying that the PGI Index, despite distilling intelligence into a single value, is diametrically opposed to it: the g-factor is a hidden value that only correlates with performance on intelligence tests, but is not computed from them.

PGI Index

The rough idea for the PGII is the following:

$$ PGII = f(Accuracy) * g(Resource \, Consumption) * h(Generalisation \, Abilities) $$

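Here we take $f(x) = x^2$, $g$ = the sum of the resource scores and $h$ = the product of the generalisation factors, so:

$$ PGII = Accuracy^2 \cdot \sum_i v_i \cdot \prod_j u_j $$

where $v_i$ are the resource consumption scores (Step 2) and $u_j$ the generalisation factors (Step 3).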

Given a set of tasks, the PGII is given by the average accuracy over those tasks multiplied by two factors. The first accounts for how many resources are needed to achieve that accuracy. The second tells us how much performance degrades when new, unseen tasks are added and environments are changed, basically how general the agent is. Since accuracy is ultimately king, it is squared, so that efficient and general but inept agents do not score too high relative to specialised but effective agents.
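The formula can be written as a small function. This is a sketch assuming the inputs already follow the conventions of the recipe: accuracy as a fraction in [0, 1], resource scores in [0, 10] and generalisation factors in [0, 1]. The name `pgii` and the range checks are our own additions, not part of the original definition.

```python
from math import prod
from typing import Sequence


def pgii(accuracy: float, resources: Sequence[float],
         generalisation: Sequence[float]) -> float:
    """PGII = accuracy^2 * sum(resource scores) * product(generalisation factors).

    accuracy: average accuracy on the known tasks, as a fraction in [0, 1].
    resources: one score in [0, 10] per relevant resource (Step 2).
    generalisation: one retention factor in [0, 1] per unseen-task scenario (Step 3).
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be a fraction in [0, 1]")
    if any(not 0.0 <= r <= 10.0 for r in resources):
        raise ValueError("resource scores must lie in [0, 10]")
    if any(not 0.0 <= u <= 1.0 for u in generalisation):
        raise ValueError("generalisation factors must lie in [0, 1]")
    return accuracy**2 * sum(resources) * prod(generalisation)


print(pgii(0.5, [5, 5, 5], [0.8]))
```

With three scored resources the maximum is 1.0² × 30 × 1 = 30; squaring the accuracy means halving it costs a factor of four.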

Now let’s give the actual recipe to compute the PGII. This should be considered version 0.1 of the PGII: the weights given to the various components are ultimately arbitrary, and only time and enough evaluations will tell us how to tune these components to deliver a satisfactory definition of intelligence and avoid anomalies.

Step 1: Accuracy

This is straightforward: it is the standard average accuracy reported in AI evaluations in the literature, expressed as a fraction between 0 and 1 (e.g. 85% becomes 0.85). Sometimes, as in the Gato paper, it is given as a percentage of the score of the task-demonstration experts, where 100% corresponds to the per-task expert and 0% to a random policy. In this case the most performant agent can be taken as the reference, with performance 1.0. Accuracy is evaluated on a set of tasks known in advance.
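A sketch of both normalisations just described, assuming a linear map between the random-policy and expert anchors and a simple mean over tasks. The helper names and the raw scores in the example are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple


def expert_normalised(score: float, random_score: float, expert_score: float) -> float:
    """0.0 = random policy, 1.0 = per-task expert (values above 1 are not clipped)."""
    return (score - random_score) / (expert_score - random_score)


def average_accuracy(results: Sequence[Tuple[float, float, float]]) -> float:
    """Mean expert-normalised score over (score, random, expert) triples."""
    return mean(expert_normalised(s, r, e) for s, r, e in results)


def relative_to_best(avg_by_agent: Dict[str, float]) -> Dict[str, float]:
    """Rescale so that the most performant agent has reference accuracy 1.0."""
    best = max(avg_by_agent.values())
    return {agent: avg / best for agent, avg in avg_by_agent.items()}


# Hypothetical raw scores on two tasks: (agent score, random policy, expert).
agent_a = average_accuracy([(55.0, 10.0, 100.0), (0.9, 0.0, 1.0)])
agent_b = average_accuracy([(28.0, 10.0, 100.0), (0.3, 0.0, 1.0)])
print(relative_to_best({"A": agent_a, "B": agent_b}))
```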

Step 2: Resource Consumption

Assign a number from 0 to 10 to every relevant resource used, then take the sum over all resources. Here 0 means that the agent uses a huge amount of resources, while 10 means that the tasks are solved with nearly the theoretical minimum amount of resources. Examples of resources are: number of task demonstrations, time allocated to solve the tasks, energy, computations, memory, materials, etc. For a given agent only a subset may be relevant.

To be concrete this is how the score could be defined:

- Number of demonstrations or amount of supervised data:
  - 0 = The agent is given 10B+ demonstrations.
  - 1 = The agent is given <100M demonstrations, related.
  - 2 = The agent is given <100M demonstrations, unrelated.
  - 3 = The agent is given <1M demonstrations, related.
  - 4 = The agent is given <1M demonstrations, unrelated.
  - 5 = The agent is given <10k demonstrations, related.
  - 6 = The agent is given <10k demonstrations, unrelated.
  - 7 = The agent is given <100s demonstrations, related.
  - 8 = The agent is given <100s demonstrations, unrelated.
  - 9 = The agent is given <10 demonstrations.
  - 10 = The agent is given no demonstrations.

Here we distinguish between data related to the tasks, such as explicitly labelled images for object detection, and unrelated data, for instance everything a human has learned during his/her life. For other resources we can define:

- Time to complete the tasks: 0 = years, 10 = nanoseconds

- Energy used: 0 = gigawatts, 10 = watts

- Computations performed: 0 = ZettaFLOPS, 10 = FLOPS

- Memory used: 0 = zettabytes, 10 = kilobytes

- Other resources (e.g. materials, food)
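Taken together, the two rubrics above can be encoded as small scoring helpers. This is a sketch under loudly stated assumptions: `demonstration_score` and `log_scale_score` are hypothetical names, “<100s” is read as fewer than 1,000, the unscored gap between 100M and 10B demonstrations is mapped to 0, and the other resources are interpolated log-linearly between the listed endpoints (the rubric itself only gives the endpoints).

```python
import math


def demonstration_score(n: int, related: bool) -> int:
    """Encode the 0-10 demonstration rubric.

    Assumptions: "<100s" is read as fewer than 1,000, and counts between
    100M and 10B, which the rubric leaves open, are scored 0 like 10B+.
    """
    if n == 0:
        return 10
    if n < 10:
        return 9
    # (exclusive upper bound, score if related, score if unrelated)
    bands = [(1_000, 7, 8), (10_000, 5, 6), (1_000_000, 3, 4), (100_000_000, 1, 2)]
    for upper, if_related, if_unrelated in bands:
        if n < upper:
            return if_related if related else if_unrelated
    return 0


def log_scale_score(value: float, worst: float, best: float) -> float:
    """Interpolate a 0-10 score on a log10 scale between `worst` (0) and `best` (10).

    Assumption: the rubric is log-linear between its endpoints; values beyond
    either endpoint are clamped.
    """
    frac = (math.log10(worst) - math.log10(value)) / (math.log10(worst) - math.log10(best))
    return 10 * min(1.0, max(0.0, frac))


print(demonstration_score(63_000_000, related=True))          # 1
print(demonstration_score(30_000_000, related=False))         # 2
print(round(log_scale_score(100.0, worst=1e9, best=1.0), 1))  # 7.8
```

Under this log-linear reading, a 100 W consumer lands at about 7.8 on the energy scale (gigawatts = 0, watts = 10).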

If two AGIs are scored on a different number of resources, make sure to normalise the sums to the same maximum value for a meaningful comparison.
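One possible normalisation, assuming a simple linear rescaling of the resource sum to a common maximum of 10 × `n_reference`; the helper name and the example scores are hypothetical.

```python
from typing import Sequence


def normalised_resource_sum(scores: Sequence[float], n_reference: int) -> float:
    """Linearly rescale a sum of 0-10 scores so its maximum is 10 * n_reference."""
    if not scores:
        raise ValueError("at least one resource score is needed")
    return sum(scores) * n_reference / len(scores)


# Agent A was scored on four resources, agent B on three: compare both on a 0-30 scale.
print(normalised_resource_sum([2, 2, 8, 6], n_reference=3))  # 13.5
print(normalised_resource_sum([1, 5, 6], n_reference=3))     # 12.0
```

Linear rescaling keeps each agent’s average score per resource unchanged, which is one reasonable reading of “normalise to the same max value”.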

Step 3: Generalisation Abilities

Step 3 requires a second set of previously unknown tasks on which the agent is evaluated again. Moreover, the new set of tasks needs to include new environments and modalities: for instance, if the first set of tasks only involved text summarisation and video games, the second set can include real-world robotic tasks and logic. For each new average accuracy we compute the performance relative to the accuracy on the first set of tasks. In addition, the agent’s performance is computed (or, more realistically, only extrapolated) when tasks and environments are varied arbitrarily, to prevent large hard-coded architectures from scoring too high. For steady or increasing performance we assign a value of 1, while for a performance drop of 30%, for instance, we would assign 0.7. Finally, we multiply all these factors together.
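A minimal sketch of the generalisation factor, assuming the “performance drop” is relative to the accuracy on the first set of tasks (so 0.8 → 0.56 is a 30% drop and gives 0.7). The helper names and the example accuracies are hypothetical.

```python
from math import prod
from typing import Sequence


def retention_factor(base_accuracy: float, new_accuracy: float) -> float:
    """1.0 for steady or increasing performance, else new/base (a 30% drop gives 0.7)."""
    if base_accuracy <= 0:
        raise ValueError("base accuracy must be positive")
    return min(1.0, new_accuracy / base_accuracy)


def generalisation_product(base_accuracy: float, new_accuracies: Sequence[float]) -> float:
    """Multiply the retention factors of all unseen-task scenarios together."""
    return prod(retention_factor(base_accuracy, acc) for acc in new_accuracies)


# Hypothetical agent: 0.8 on known tasks, 0.48 on novel areas, 0.4 on arbitrary new tasks.
print(round(generalisation_product(0.8, [0.48, 0.4]), 2))  # 0.3
```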

To recap, compute the 2 factors for the following situations:

- The new tasks’ environments are very different, and the tasks cover novel areas.

- Tasks are added arbitrarily over time.

Some rationale behind these choices:

- To eliminate task overfitting, the number and kind of tasks cannot be static: the system must be exposed to new tasks it never trained on in order to measure its intelligence.

- Looking at the performance drop implies that the initial set of tasks must already be of high difficulty; otherwise the fall in performance is very sharp.

- Taking the product of these factors implies that the PGII gives a lot of weight to generalisation abilities.

It is not allowed to inject training data after the tasks are revealed, but AGIs are allowed to learn what they need in an unsupervised fashion after the reveal, and are shown a few demonstrations as part of the task description.

An example: Average Human vs Gato

As a fun example, let’s compute the PGII of an average human and Gato with some guesswork. The tasks on which Gato has been evaluated are certainly not comprehensive, but they do touch on a decent number of areas of intelligence, so even if the task distribution is not ideal we can perform the 3 steps above. Comparing the indexes for two agents is supposed to be robust to noise in the estimate of the parameters, so we will take some shortcuts to estimate the previously defined parameters (the whole point is that it should not take much effort to compute the PGII!)

Step 1

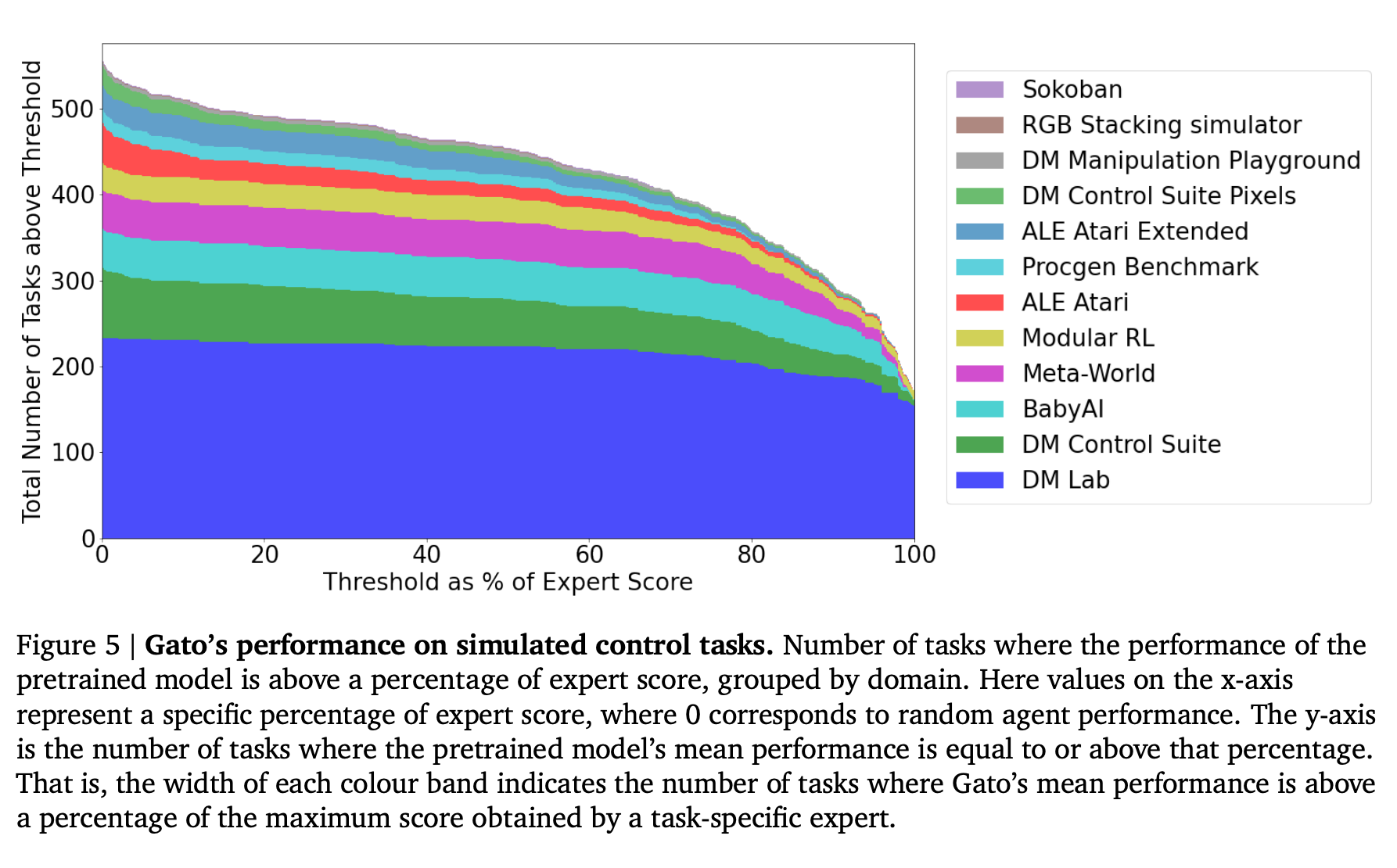

Gato’s average accuracy is not reported, but we know that it performs more than 450 of its 604 tasks above a 50% expert-score threshold. Since the tasks are relatively mundane for humans, we can approximate the performance of an average human as 50% of the expert score. This means that, performance-wise, Gato beats the average human 450 to 154. If we assign a performance score of 1.0 to Gato, then a human gets 154/450 = 0.34. This is probably too severe for humans, as the average accuracy would show less difference, but we will stick with it. The figure above shows Gato’s tasks together with Gato’s scores.

$$ Human: 0.34 $$ $$ Gato: 1.0 $$

Step 2

Gato is trained on 63M demonstrations, all related to the tasks to be solved, so Gato takes a 1 here. It is controversial what we should consider for the average human, but let’s be conservative and consider that our lifetime is filled with many unrelated demonstrations that contribute to our ability to solve tasks such as playing video games, a.k.a. common sense. If we assume one demonstration per minute, the number of demonstrations seen by an adult is also in the tens of millions. Humans take a 2 here. Memory-wise, Gato is a 1.2B-parameter model, so a few GB (about 5 GB at 32-bit precision), while a human brain is suspected to hold the analogue of a few petabytes. We assign 5 to Gato and 2 to humans. Energy consumption is slightly in favour of humans, with about 100 W for a human body versus 100+ W for a desktop PC. Since Gato runs on powerful servers to execute in reasonable time, we give 8 to humans and 6 to Gato. Time and computations to complete the tasks are not that relevant here, so we omit them for simplicity.

$$ Human: v=(2, 2, 8) $$ $$ Gato: v=(1, 5, 6) $$

Step 3

Gato’s paper shows how the AGI struggles to transfer learning to out-of-distribution tasks, even when the tasks are performed in a similar environment. As a set of unseen tasks we could hypothetically consider the following:

- ARC (the Abstraction and Reasoning Corpus)

- Reading a book and later answering questions about events in the plot.

- Raven’s Progressive Matrices

- Turing Test (or for humans the Reverse Turing Test)

- Cooking an arbitrary dish on request and obtaining a positive review.

- IMO Grand Challenge.

- Bongard Problems.

Such a set, even if small, seems to cover nearly all areas of intelligence and could be a good candidate to be a complete set of tasks.

With some knowledge of the current limitations of deep learning generalisation, we can assign 0.1 to Gato for completely new unseen tasks covering novel areas, such as those listed above (a 90% drop), and 0.05 for arbitrary new tasks. To humans we assign 0.6 and 0.5 instead, assuming little familiarity with the unseen tasks; the performance drop for humans is also significant, but not disastrous, since the average human is by definition average over many tasks.

$$ Human: u=(0.6, 0.5) $$ $$ Gato: u=(0.1, 0.05) $$

The final result:

$$ Human \, PGII = (0.34^2) * (2 + 2 + 8) * 0.6 * 0.5 = 0.42 $$ $$ Gato \, PGII = (1.0^2) * (1 + 5 + 6) * 0.1 * 0.05 = 0.06 $$

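Both values are out of a theoretical maximum of 30 (accuracy 1.0, three resources each scored 10, and no performance drop). Notice that Human > Gato, even though Gato’s accuracy is superior to that of average humans on the initial set of tasks.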
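The toy calculation can be reproduced in a few lines. This sketch only re-uses the guessed parameters from Steps 1 to 3; the local `pgii` helper is our own shorthand for the formula, not an official implementation.

```python
from math import prod


def pgii(accuracy, resources, generalisation):
    """Accuracy squared, times the sum of resource scores, times the product of factors."""
    return accuracy**2 * sum(resources) * prod(generalisation)


# Step 1: Gato beats the assumed human level (50% expert score) on 450 of 604 tasks.
human_accuracy = round((604 - 450) / 450, 2)  # 154/450 rounded to 0.34
gato_accuracy = 1.0

human = pgii(human_accuracy, resources=(2, 2, 8), generalisation=(0.6, 0.5))
gato = pgii(gato_accuracy, resources=(1, 5, 6), generalisation=(0.1, 0.05))
maximum = pgii(1.0, resources=(10, 10, 10), generalisation=(1.0, 1.0))

print(f"Human PGII = {human:.2f}, Gato PGII = {gato:.2f}, max = {maximum:.0f}")
# Human PGII = 0.42, Gato PGII = 0.06, max = 30
```

Changing a single generalisation factor moves the result multiplicatively, which is why Gato’s low transfer scores outweigh its accuracy advantage.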

Pros and Cons

Some useful characteristics of the PGII:

- Task accuracy is just a single component of the evaluation of intelligence, resource consumption and generalisation are also taken into account.

- Intuitive, can be roughly estimated by looking at the AI architecture and working principles.

- Based on tasks performances, so independent of the internals of the agent (no need to worry about the Chinese room dilemma).

- Weakly dependent on the set of tasks chosen, assuming that the initial and unseen tasks together are comprehensive enough. So different AIs evaluated on different sets of tasks can in principle be compared.

- Since it is not specific to any set of tasks, it can apply to any entity: humans, AIs, superAIs, animals.

Some open questions, observations and shortcomings:

- To be fine-grained, the PGII requires a lot of tasks, which may be cumbersome in practice. What is the minimum set that provides a reliable measure?

- Adding arbitrary tasks over a long time is a hard requirement. For precision tests, a practical implementation could be programmatically generating tasks, such as in Procgen.

- In [3], a list of desirable properties of an intelligence definition is given: Valid, Meaningful, Informative, Wide Range, General, Unbiased, Fundamental, Formal, Objective, Universal and Practical. Assuming a complete set of tasks is chosen, the PGI Index ticks these boxes, apart from being fundamental. For practical purposes we may not care about it being universal, and can limit ourselves to human-relevant tasks and human-scale ranges of resource consumption.

- Perhaps it would be more appropriate to call the PGI test a meta-test, since it includes intelligence tests as subroutines and looks at performance and change in performance as the tests/tasks are varied.

- Even if intuitive, reducing intelligence to an aggregate number may be an oversimplification.
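As an illustration of programmatically generating tasks (the Procgen-style suggestion above), here is a toy generator of letter sequences similar to the C-test rows h=7 and h=9. It is a hypothetical sketch: it is not Procgen, and it is not the C-test construction, which also controls the Levin complexity of each item. The function names, the default length and the seeding scheme are assumptions.

```python
import random
import string
from typing import List, Tuple


def letter_progression_task(rng: random.Random, length: int = 4) -> Tuple[List[str], str]:
    """Generate a C-test-like prompt with a constant letter step, plus its answer.

    Hypothetical generator: step and start are drawn at random so that every
    letter, including the answer, stays within a..z.
    """
    if not 1 <= length <= 25:
        raise ValueError("length must be between 1 and 25")
    step = rng.randint(1, 25 // length)
    start = rng.randint(0, 25 - step * length)
    letters = [string.ascii_lowercase[start + i * step] for i in range(length + 1)]
    return letters[:-1], letters[-1]


def fresh_batch(seed: int, n: int = 3) -> List[Tuple[List[str], str]]:
    """A reproducible batch of unseen tasks; change the seed to get new ones."""
    rng = random.Random(seed)
    return [letter_progression_task(rng) for _ in range(n)]


for prompt, answer in fresh_batch(seed=2024):
    print(",".join(prompt), "->", answer)
```

Keeping the seed secret until evaluation time is one way to make tasks “not known in advance” while still allowing the results to be reproduced afterwards.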

References

For a full list of references you can refer to [1].

[1] J. Hernández-Orallo, The measure of all minds: evaluating natural and artificial intelligence, Cambridge University Press, 2017.

[2] S. Legg and M. Hutter, A collection of definitions of intelligence, Frontiers in Artificial Intelligence and Applications, 157 (2007).

[3] S. Legg and M. Hutter, Universal intelligence: A definition of machine intelligence, Minds and Machines, 17 (2007), pp. 391-444. https://arxiv.org/pdf/0712.3329.pdf

[4] P. Wang, On Defining Artificial Intelligence, Journal of Artificial General Intelligence, 10 (2019), pp. 1-37.

[5] On the Differences between Human and Machine Intelligence https://scholar.google.com/citations?view_op=view_citation&hl=en&user=0_Rq68cAAAAJ&sortby=pubdate&citation_for_view=0_Rq68cAAAAJ:C33y2ycGS3YC

[6] F. Chollet, On the Measure of Intelligence, 2019. https://arxiv.org/abs/1911.01547

[7] S. Reed et al., A Generalist Agent (Gato), 2022. https://www.deepmind.com/publications/a-generalist-agent

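[8] P. Sanghi and D. L. Dowe, A computer program capable of passing I.Q. tests, Joint International Conference on Cognitive Science, 2003. https://users.monash.edu/~dld/Publications/2003/Sanghi+Dowe_IQ_Paper_JIntConfCogSci2003.pdf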
